Meet the Maker: Robotics Student Rolls Out Autonomous Wheelchair With NVIDIA Jetson

With the help of AI, robots, tractors and baby strollers — even skate parks — are becoming autonomous. One developer, Kabilan KB, is bringing autonomous-navigation capabilities to wheelchairs, which could help improve mobility for people with disabilities.

The undergraduate from the Karunya Institute of Technology and Sciences in Coimbatore, India, is powering his autonomous wheelchair project using the NVIDIA Jetson platform for edge AI and robotics.

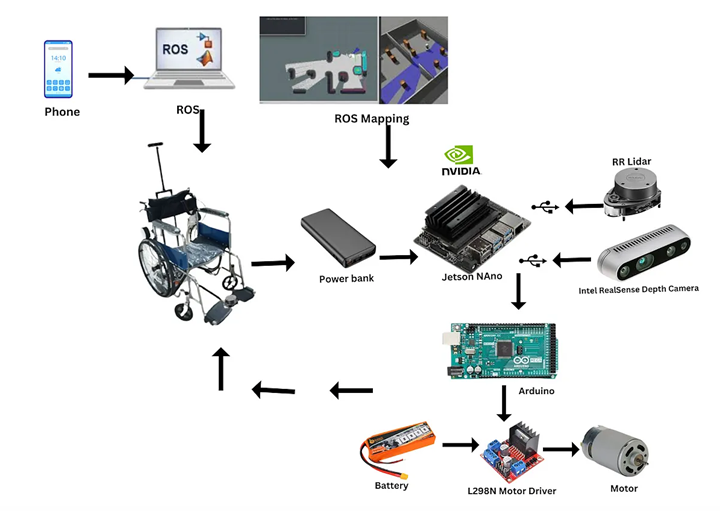

The autonomous motorized wheelchair is connected to depth and lidar sensors — along with USB cameras — which allow it to perceive the environment and plan an obstacle-free path toward a user’s desired destination.

“A person using the motorized wheelchair could provide the location they need to move to, which would already be programmed in the autonomous navigation system or path-planned with assigned numerical values,” KB said. “For example, they could press ‘one’ for the kitchen or ‘two’ for the bedroom, and the autonomous wheelchair will take them there.”
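The numbered-destination idea KB describes can be pictured as a simple lookup table. The sketch below is illustrative only and is not taken from his project: the `Goal` type, the `resolve_destination` helper and the map coordinates are all hypothetical, and only the "one for the kitchen, two for the bedroom" assignments come from the quote.

```python
from typing import NamedTuple


class Goal(NamedTuple):
    """A named destination with a position in the map frame (metres)."""
    name: str
    x_m: float
    y_m: float


# Hypothetical keypad assignments. The room names follow the quote above;
# the coordinates are made-up placeholders, not measurements from the project.
DESTINATIONS = {
    1: Goal("kitchen", 4.0, 1.5),
    2: Goal("bedroom", 7.5, 3.0),
}


def resolve_destination(key: int) -> Goal:
    """Return the pre-programmed goal for a keypad press, or raise ValueError."""
    try:
        return DESTINATIONS[key]
    except KeyError:
        raise ValueError(f"no destination assigned to key {key}") from None
```

Rejecting unassigned keys explicitly, rather than falling back to a default room, keeps the wheelchair from moving when the input is ambiguous.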

An NVIDIA Jetson Nano Developer Kit processes data from the cameras and sensors in real time. It then uses deep learning-based computer vision models to detect obstacles in the environment.

The developer kit acts as the brain of the autonomous system — generating a 2D map of its surroundings to plan a collision-free path to the destination — and sends updated signals to the motorized wheelchair to help ensure safe navigation along the way.
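To make "planning a collision-free path on a 2D map" concrete, here is a minimal sketch that runs a breadth-first search over an occupancy grid. It is an assumption-laden illustration, not the planner used on the wheelchair: the article does not say which algorithm KB chose, and the `plan_path` function and the grid encoding (0 for free, 1 for obstacle) are hypothetical.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search on a 2D occupancy grid.

    grid  -- list of rows; 0 marks a free cell, 1 marks an obstacle
    start -- (row, col) of the current cell
    goal  -- (row, col) of the destination cell
    Returns the shortest 4-connected path as a list of cells, or None.
    """
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] or grid[goal[0]][goal[1]]:
        return None  # start or goal sits inside an obstacle

    parents = {start: None}  # doubles as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # no collision-free route exists
```

Breadth-first search is chosen here only because it is short and always returns a shortest route on a uniform grid; a real robot would more likely use a cost-aware planner and replan as the map updates.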

About the Maker

KB, who has a background in mechanical engineering, became fascinated with AI and robotics during the pandemic, when he spent his free time looking up educational YouTube videos on the topics.

He’s now working toward a bachelor’s degree in robotics and automation at the Karunya Institute of Technology and Sciences and aspires to one day launch a robotics startup.

KB, a self-described supporter of self-education, has also received several certifications from the NVIDIA Deep Learning Institute, including “Building Video AI Applications at the Edge on Jetson Nano” and “Develop, Customize and Publish in Omniverse With Extensions.”

Once he learned the basics of robotics, he began experimenting with simulation in NVIDIA Omniverse, a platform for building and operating 3D tools and applications based on the OpenUSD framework.

“Using Omniverse for simulation, I don’t need to invest heavily in prototyping models for my robots, because I can use synthetic data generation instead,” he said. “It’s the software of the future.”

His Inspiration

With this latest NVIDIA Jetson project, KB aimed to create a device that could be helpful for his cousin, who has a mobility disorder, and other people with disabilities who might not be able to control a manual or motorized wheelchair.

“Sometimes, people don’t have the money to buy an electric wheelchair,” KB said. “In India, only upper- and middle-class people can afford them, so I decided to use the most basic type of motorized wheelchair available and connect it to the Jetson to make it autonomous.”

The personal project was funded by the Program in Global Surgery and Social Change, which is jointly positioned under Boston Children’s Hospital and Harvard Medical School.

His Jetson Project

After purchasing the basic motorized wheelchair, KB connected its motor hub to the NVIDIA Jetson Nano, along with the lidar sensor and depth cameras.

He trained the AI algorithms for the autonomous wheelchair using YOLO object detection on the Jetson Nano and used the Robot Operating System, or ROS, a popular software framework for building robotics applications.

The wheelchair can tap these algorithms to perceive and map its environment and plan a collision-free path.
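As a rough picture of the mapping step, the sketch below turns one lidar sweep into a small occupancy grid. It is a simplified assumption, not code from the project: the `scan_to_grid` function, grid size and cell resolution are hypothetical. The parameter names `ranges`, `angle_min` and `angle_increment` mirror fields of the ROS `LaserScan` message, but the code has no ROS dependency.

```python
import math


def scan_to_grid(ranges, angle_min, angle_increment,
                 size=21, resolution=0.25, max_range=5.0):
    """Mark the grid cells hit by lidar beams.

    The sensor sits at the centre cell and faces +x (to the right).
    ranges          -- beam distances in metres, one per angle step
    angle_min       -- angle of the first beam, in radians
    angle_increment -- angle between consecutive beams, in radians
    resolution      -- side length of one grid cell, in metres
    Returns a size x size list of rows; 1 marks an occupied cell.
    """
    grid = [[0] * size for _ in range(size)]
    centre = size // 2
    for i, r in enumerate(ranges):
        # Skip missing or out-of-range returns (inf and NaN both fail this test).
        if not (0.0 < r < max_range):
            continue
        angle = angle_min + i * angle_increment
        col = centre + round(r * math.cos(angle) / resolution)
        row = centre - round(r * math.sin(angle) / resolution)  # +y is up
        if 0 <= row < size and 0 <= col < size:
            grid[row][col] = 1
    return grid
```

This version only marks beam endpoints. A fuller mapper would also clear the cells each beam passes through and fuse scans over time as the wheelchair moves.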

“The NVIDIA Jetson Nano’s real-time processing speed prevents delays or lags for the user,” said KB, who’s been working on the project’s prototype since June. The developer dives into the technical components of the autonomous wheelchair on his blog.

Looking forward, he envisions expanding the project so that users can control a wheelchair with brain signals, captured by electroencephalograms, or EEGs, and fed to machine learning algorithms. The latest NVIDIA Jetson Orin modules for edge AI and the NVIDIA Isaac platform for robotics could play a role.

“I want to make a product that would let a person with a full mobility disorder control their wheelchair by simply thinking, ‘I want to go there,’” KB said.

Learn more about the NVIDIA Jetson platform.
